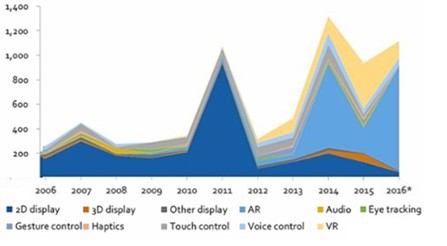

Major tech corporations have also been reasonably active acquirers, albeit with a preference for earlier-stage companies that bolster their technology portfolios and talent pools. Intel (Omek and Indisys), Apple (VocalIQ), Google (Flutter), Freescale Semiconductor (Cognivue), and Facebook-owned Oculus (Pebbles Interfaces) have all targeted HMI startups in sub-$100m transactions.

Applications of HMI in Industry 4.0

A lot of attention has been focused on the application of HMI technologies in consumer use cases like virtual assistants. Instead of repeating that message, we will focus on a few specific applications that are impacting industrial companies.

Industrial machine control is an obvious area of focus. The machinery used in industries ranging from oil & gas to healthcare is often plagued with archaic user interfaces. These interfaces can reduce productivity on machines that are commonly among the most expensive assets in the facilities where they’re located. Integrating state-of-the-art HMI into these assets can create new efficiencies on many levels:

· Machine operators benefit from the physical feedback of haptic technologies, and from wearables or AR glasses that free their hands and attention to focus on their primary task. New levels of safety are often achieved, which is far more important than efficiency ever will be.

· Maintenance professionals can quickly visualize machine status in real time, enabling them to prioritize their workload and anticipate tooling and material requirements for individual tasks. Remote collaboration also allows off-site specialists to consult or guide local technicians through tasks that would otherwise require travel.

· Managers are empowered to quickly survey the status of all operations inside a given plant or facility and receive immediate notification when potential bottlenecks arise.

Across these categories, deploying advanced HMI technology can help attract young people to an aging workforce (a benefit that is consistent across all Industry 4.0 tech).

The automotive sector has also become a rapid adopter of new HMI technology. Automakers all strive to deliver a differentiated UX that breeds demand for a given brand/model. New HMI technologies are most commonly found in the infotainment systems of high-end models, but the redesign of mundane features like door openers has also demonstrated their applicability.

Touch screens and gesture recognition technology have emerged as favorites for automakers. You’ve likely noticed that the touch screen in your car isn’t as sleek as the one on your smartphone. This is because automakers have favored resistive displays over the capacitive screens found on most phones. The long design cycles (7–10 years), the need for longevity (12 years, 200k miles), and the intense focus on cost all contribute to a slow rate of adoption of new technology. However, suppliers like Continental are working to commercialize infrared screens. Infrared sensors are also being evaluated to monitor driver attentiveness in Advanced Driver-Assistance Systems.

Basic gesture recognition sensors are also being applied for tasks outside the car. Drivers approaching their parked Ford Escape with an armful of groceries can now open the tailgate by passing their foot underneath the rear bumper instead of setting down what they’re carrying.

Advertisers are also adopting new gesture recognition and eye-tracking technologies in Digital Out of Home (“DOOH”) advertising. Digital displays are more expensive to deploy, but have been shown to drive 2.5x more revenue per incremental dollar spent. The most advanced ad displays enable a wall-mounted advertisement to become a point of sale. In other cases, they allow a viewer to access additional content related to the ad by downloading an app or launching a website from a QR code. Higher engagement, combined with new analytics that enable advertisers to target precise groups of users, has resulted in new revenue for both media companies and their customers.

Conclusion

Many of the use cases identified here may not feel like they’re on the cutting edge of technology in today’s internet age. However, the computerization of industry and manufacturing is one of the key theses underpinning the Industry 4.0 trend. As such, integrating technologies already used in consumer electronics would drive a significant investment and productivity boom, and that’s to say nothing of the potential of state-of-the-art technologies currently in development.

The design and replacement cycles for the types of large physical assets we’ve covered are certainly longer than those in the consumer electronics industry. However, the highly capital-intensive nature of the industries being transformed means that dramatic improvements in cost structure, and new revenue generated through operating efficiencies, are possible.